Authors

Yiming Zhang, Dongning Guo

Abstract

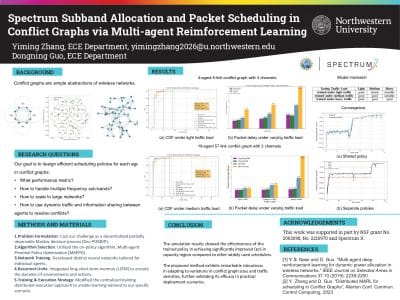

This work introduces an approach to packet scheduling in conflict graphs based on a fully scalable multi-agent reinforcement learning (MARL) framework. The objective is to minimize the average packet delay under stochastic packet arrivals to the links. Each vertex of the conflict graph represents a link, and a scheduling conflict arises if two adjacent vertices (that is, two conflicting links) are assigned to the same channel in the same time slot.
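To make the conflict rule concrete, the following is a minimal sketch, not the authors' implementation. It assumes the conflict graph is stored as a symmetric adjacency map and that a slot's schedule maps each link to a channel index, or to `None` when the link is idle. The names `ConflictGraph` and `find_conflicts` are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical representation: vertices are links, and an edge joins two
# links that conflict. The map is assumed symmetric (v in graph[u] iff
# u in graph[v]).
ConflictGraph = Dict[int, Set[int]]


def find_conflicts(
    graph: ConflictGraph,
    assignment: Dict[int, Optional[int]],
) -> List[Tuple[int, int]]:
    """Return the conflicting link pairs (u, v), u < v, for one time slot.

    `assignment` maps a link to its channel in this slot, or None if idle.
    Two adjacent links conflict when both use the same channel.
    """
    conflicts = []
    for u, neighbors in graph.items():
        channel_u = assignment.get(u)
        if channel_u is None:
            continue  # an idle link cannot conflict with anything
        for v in neighbors:
            # u < v reports each undirected edge only once
            if u < v and assignment.get(v) == channel_u:
                conflicts.append((u, v))
    return sorted(conflicts)


# Three links in a line: link 1 conflicts with both link 0 and link 2.
path_graph: ConflictGraph = {0: {1}, 1: {0, 2}, 2: {1}}

# Links 0 and 2 are not adjacent, so they may share channel 0.
valid_slot = {0: 0, 1: None, 2: 0}

# Links 0 and 1 are adjacent and share channel 0, so they conflict.
invalid_slot = {0: 0, 1: 0, 2: 1}
```

Here `find_conflicts(path_graph, valid_slot)` returns an empty list, while `find_conflicts(path_graph, invalid_slot)` returns `[(0, 1)]`. A scheduler in this setting has to keep the conflict list empty in every slot while serving queued packets as quickly as possible.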

The problem is formulated as a decentralized partially observable Markov decision process (Dec-POMDP), and scheduling decisions are optimized with the multi-agent proximal policy optimization (MAPPO) algorithm. Advanced recurrent structures are integrated into the neural network to improve performance.

The proposed MARL solution supports both decentralized training and decentralized execution, which allows it to scale to large networks. Extensive simulations across diverse conflict graphs show that the approach outperforms established schedulers in both throughput and delay under varied traffic conditions.