Authors

Kun Yang, Shu-ping Yeh, Menglei Zhang, Jerry Sydir, Jing Yang, and Cong Shen

Abstract

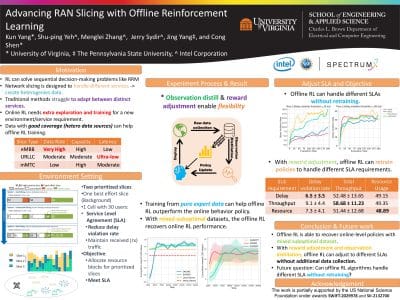

Dynamic radio resource management (RRM) in wireless networks presents significant challenges, particularly in the context of Radio Access Network (RAN) slicing. This technology, crucial for catering to varying user requirements, often involves complex optimization problems. Existing Reinforcement Learning (RL) approaches, while achieving good performance in RAN slicing, typically rely on online algorithms or behavior cloning. These methods require either continuous interaction with the environment or access to high-quality datasets, which hinders their practical deployment. To address these limitations, this paper applies offline RL to the RAN slicing problem, marking a significant shift towards more feasible and adaptive RRM methods. We demonstrate how offline RL can effectively learn near-optimal policies from sub-optimal datasets, a notable advancement over existing practices. Our research highlights the inherent flexibility of offline RL, showcasing its ability to adjust policy criteria without additional environmental interactions. Furthermore, we present empirical evidence of the efficacy of offline RL in adapting to various service-level requirements, illustrating its potential in diverse RAN slicing scenarios.